CDC Promotes Another Misleading Mask Study

The CDC clearly disgraced itself during the pandemic.

At this point, that’s not particularly newsworthy — it’s become an expectation that the CDC will release a new flawed “study” every few weeks in an effort to promote their policy goals.

The interventions and policies championed by the CDC haven’t worked, either domestically or globally. The policy failings are so extensive they could quite easily fill a book.

Their MMWR (Morbidity and Mortality Weekly Report) releases, or as they should be known, policy advocacy dressed up as “science,” have caused incalculable damage. Politicians and teachers’ unions have been given complete authority to enforce mask mandates and other policies designed to continue indefinitely during seasonal surges.

Based on the CDC’s extensive track record, it’s possible that the latest bit of scientific propaganda from NIH is merely their best attempt to grab some of that power for themselves. After witnessing the incredibly poor work by the CDC, they must have thought to themselves, “We can’t let them show us up like this! We can conduct absurdly bad ‘studies’ meant to ensure endless masking too!”

And that’s exactly what they did.

It should come as no surprise given how unimaginably horrendous former NIH director Francis Collins was at science, which of course earned him a promotion to the White House. But if you haven’t already come across the organization’s attempt at mask advocacy, it’s important to break down just how contemptible it is.

The NIH, the CDC, NIAID…all of these organizations are raging against the dying of the light, trying their best to justify their stunningly dramatic reversal on mask mandates. Science and evidence be damned.

They’re so desperate, they’ll resort to anything. And this “study” is the proof.

Sample Size

If you haven’t already seen it, the study has been posted as a preprint, with NIH gleefully releasing the results to the press several days ago. As always, their purposefully misleading conclusions were ready-made for media consumption.

You can only imagine the attention this would be getting if the media and the public weren’t so understandably distracted by the war in Ukraine.

The study had admirable goals — an attempt to assess the importance of masking in preventing “secondary” cases. Primary cases are defined as infections that came from the community, while secondary cases refer to transmission that seemingly occurred in schools.

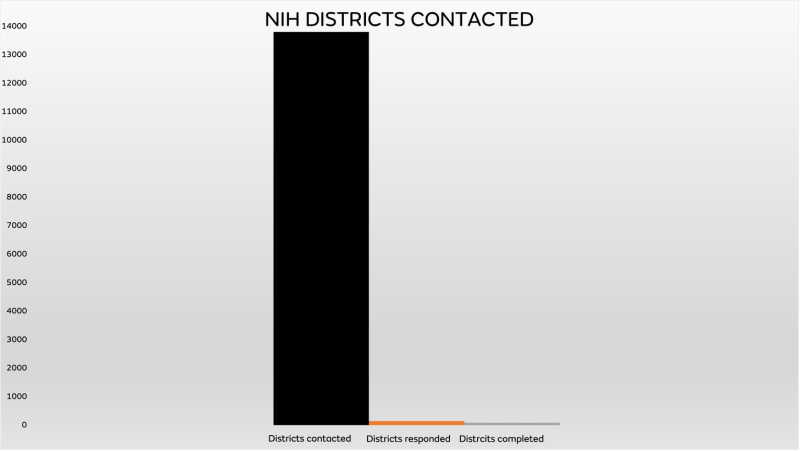

To do this, the researchers contacted 13,800 school districts; 143 responded with interest in filling out a survey, while 85 completed the survey. Here’s how that looks visually:

Immediately, the problems are noticeable.

When only 85 of the 13,800 districts contacted actually complete the survey, the sample is likely pre-selected for districts convinced their policies mattered. And only 61 of the 85 consistently reported data that could be used for the results.
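To put that attrition in numbers, here is a minimal sketch that uses only the counts quoted above (13,800 contacted, 143 interested, 85 completed, 61 with usable data). The variable names are illustrative and not taken from the study.

```python
# Counts quoted in this article; names are illustrative.
contacted = 13_800
funnel = {
    "expressed interest": 143,
    "completed survey": 85,
    "reported usable data": 61,
}

# Share of all contacted districts remaining at each stage.
shares = {stage: count / contacted for stage, count in funnel.items()}

for stage, share in shares.items():
    print(f"{stage}: {share:.2%} of contacted districts")
```

By this arithmetic, roughly 0.6% of contacted districts completed the survey and about 0.4% ended up in the results.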

But don’t worry, it gets so, so much worse.

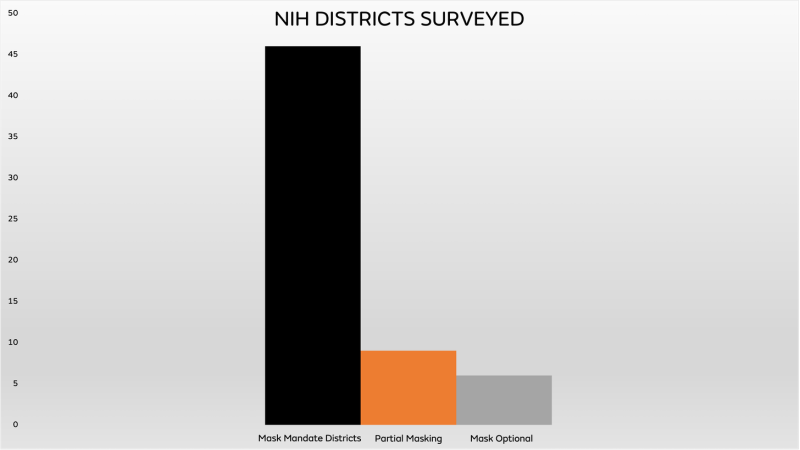

Of the 61 districts where the results were tracked, the breakdown of forced vs. optional masking was unbelievably lopsided.

I mean, really, REALLY lopsided:

Out of 61 school districts included — 6 were mask-optional. Less than 10%.

How is that remotely useful? These aren’t comparable data sets. It’s not balanced, 30 vs. 30, for example.

But it gets worse. So, so much worse.

The six mask-optional districts that completed reporting throughout the study period were tiny. This makes sense, given that the vast majority of schools had mask mandates during the study period and most schools without mandates in 2021 were likely in smaller jurisdictions, but it’s stunning to visually examine the difference in size between the cohorts:

Yeah. It’s bad.

Nearly 1.1 million students were tracked in the mask-mandate districts while only 3,950 were tracked in mask-optional districts.
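The imbalance is easy to quantify. The sketch below assumes "nearly 1.1 million" can be rounded to 1,100,000 for illustration and compares only the figures quoted in this article; the variable names are made up for the example.

```python
# Figures quoted in this article. The mandate count is an approximation
# of "nearly 1.1 million", used here only for illustration.
mandate_students = 1_100_000
optional_students = 3_950
total_districts = 61
optional_districts = 6

# Mask-optional share of districts and of tracked students.
optional_district_share = optional_districts / total_districts
optional_student_share = optional_students / (mandate_students + optional_students)

# How many mandate students were tracked per mask-optional student.
size_ratio = mandate_students / optional_students

# Average enrollment of a mask-optional district.
avg_optional_district = optional_students / optional_districts

print(f"optional share of districts: {optional_district_share:.1%}")
print(f"optional share of students:  {optional_student_share:.2%}")
print(f"mandate students per optional student: {size_ratio:.0f}")
print(f"average mask-optional district size: {avg_optional_district:.0f}")
```

Under that rounding, the mask-optional cohort makes up just under 10% of the districts but well under 1% of the students.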

You’d think that would raise some alarm bells, but that would require intellectual honesty.

Just take a minute to imagine that the statistics were reversed. Imagine that a study of 1,100,000 students who never wore masks vs. 3,950 that did was released by independent researchers showing mask mandates in schools were completely ineffective. Do you think the results would have been published?

And even if they were published, do you think that they would be mindlessly repeated, without criticism, by The Experts™ and the media? I wonder if any of the Twitter doctors who relentlessly push masking would have a problem with the difference in sample sizes?

How did this get released? It’s completely ludicrous. How can anyone take this disparity seriously?

The researchers claim in their notes that in some of their analyses they attempted to adjust their results for size, for example by removing large school districts of over 20,000 students, but that doesn’t eliminate the vast disparity: all six mask-optional districts had 3,950 students combined. It’s truly unbelievable.

But of course, it doesn’t stop there.

Case Definitions

I am not the first to notice the inherent problems with case definitions that could cause significant issues with the conclusions reached in this study.

As previously mentioned, the researchers looked at “secondary” infections as the main outcome of interest. They essentially classified “primary,” or community infections as being unrelated to school masking policies.

However, CDC guidance on contact tracing instructs schools to treat masked interactions very differently. If districts followed that guidance, masked students who were within 3-6 feet of masked, COVID-positive students are not classified as a “close contact.”

Tracy Høeg left this comment on the study website which explains why ignoring this variable could render the conclusions essentially useless:

The recent article by Boutzoukas et al [1] analyzed the association of universal vs. partial vs. optional school masking policies with secondary in-school infection and found an unexpectedly-strong association between masking policies and secondary infections given recent studies [2,3]. Unfortunately, it appears the authors have failed to consider at least one critically important confounding variable. The CDC states that “the close contact definition excludes students who were between 3 to 6 feet of an infected student if both the infected student and the exposed student(s) correctly and consistently wore well-fitting masks the entire time.” We are aware of numerous districts across the country where contact tracing during the time period of the study [1] would not have correctly identified COVID-19 cases truly transmitted in the school to have come from the school because a masked student transmitting to another masked student would not have been considered a close contact according to CDC policy. This would lead to in-school transmission cases in districts with mask mandates being overlooked by contact tracers and incorrectly considered community transmission, giving falsely low rates of secondary transmission in districts with mask requirements. Potentially related, Boutzoukas et al [1] found unexpectedly higher rates of primary infections (or community transmission) in the universal vs. optional masking districts (125.6/1000 vs. 38.9/1000) which could at least partially be due to the close contact policy mentioned above; if secondary infections were systematically and inappropriately considered primary infections in mask-mandate districts, this would have led to secondary infections being misclassified as primary infections coming from the community. This would have increased primary infection rates while lowering secondary infection rates in universal masking districts. 
The association observed by Boutzoukas et al [1] between masking and secondary transmission may alone have been attributable to different contact tracing policies and not due to masks at all. We worry that a policy which does not consider masked transmission in schools makes the study a self-fulfilling prophecy: the expected result is lower identified secondary transmission rates in masking districts simply due to this policy. If contact tracers discount the possibility of in-school transmission because a student was masked, as the CDC instructs; even if this only occurs in some schools, that would be sufficient to cloud the entire study’s results.

Because the CDC assumes masks work (lol), they specifically instructed schools to treat possible transmission between two masked students differently, leading to contact tracers potentially mislabeling those who wore masks as “primary” infections.

By wearing a mask, you are no longer a “close contact” of another infected student who also wore a mask. How the CDC managed to justify that policy should be grounds for an entire psychological study in and of itself, but it’s virtually impossible to overstate how much of an impact that could have in contact tracing data among these schools.
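To see how much this could matter, here is a toy sketch of the misclassification mechanism. Every number in it is invented for illustration: the district, the case counts, and the misclassified shares are hypothetical and do not come from the study. The function `observed_counts` and the "secondary per 100 primary" metric are likewise assumptions made for this example.

```python
def observed_counts(true_primary, true_secondary, misclassified_share):
    """Move a share of true in-school cases into the 'primary' bucket."""
    moved = true_secondary * misclassified_share
    return true_primary + moved, true_secondary - moved

# Hypothetical district: these counts are invented, not study data.
true_primary, true_secondary = 1000, 200

for share in (0.0, 0.25, 0.5):
    primary, secondary = observed_counts(true_primary, true_secondary, share)
    ratio = secondary / primary * 100
    print(f"misclassified {share:.0%}: {ratio:.1f} secondary per 100 primary")
```

In this toy setup, nothing about actual transmission changes between the three scenarios. Only the labeling does, yet the measured secondary rate falls while the measured primary count rises, which is the same direction as the pattern Tracy describes.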

Most school mask mandate studies haven’t specifically examined secondary transmission as the main outcome, but this investigation was attempting to quantify the difference in rates between primary and secondary transmission. It ignores the possibility that schools could mislabel cases as occurring in the community when they actually occurred in schools, and that omission should be completely disqualifying.

But it raises another issue that Tracy doesn’t mention — when taking their results at face value and assuming the numbers are accurate, it stands to reason that the vast disparity in primary cases could lead to higher levels of natural immunity in the masked districts that would potentially reduce the odds of secondary transmission.

Simply, if you have more students in a district that have already been infected, they’re less likely to transmit and there are fewer susceptible students that can become infected.

Either way you look at it, the enormous implications raise HUGE red flags.

Primary vs. Secondary Cases

One of the key elements of study advocacy that Experts™ and researchers count on is the certainty that no one will actually read the tables.

These are the underlying data sets used to inform the abstract and summary. They’re always buried at the end of the text, and usually omitted from the press release advocating for endless masking.

So many poorly conducted studies fall apart once you study the tables and learn what the data actually says. This one is no exception.

As covered above, the rates of primary infections were significantly higher in mask mandate districts.

Consider this unexplored question. It’s far more likely that students and staff who attended schools with mask mandates would also live in a community with an active general mask mandate. It’s unlikely that a location, especially in 2021, would have a mask mandate exclusively in schools (ahem, NYC), isn’t it?

So why would the community rates be dramatically higher for those living under a general mask mandate?

You’d have to wonder what that says about the efficacy of mask mandates, wouldn’t you? I’m sure the researchers will be exploring that question shortly.

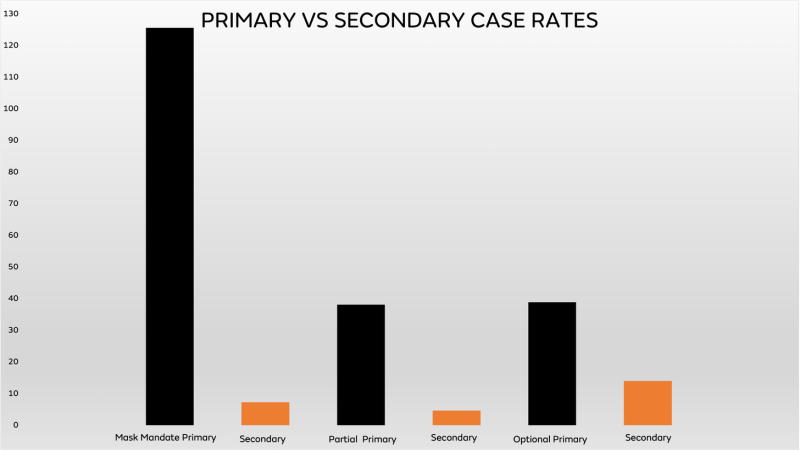

Even ignoring that, in this study, the difference in rates shows how wildly unreliable the dataset actually is:

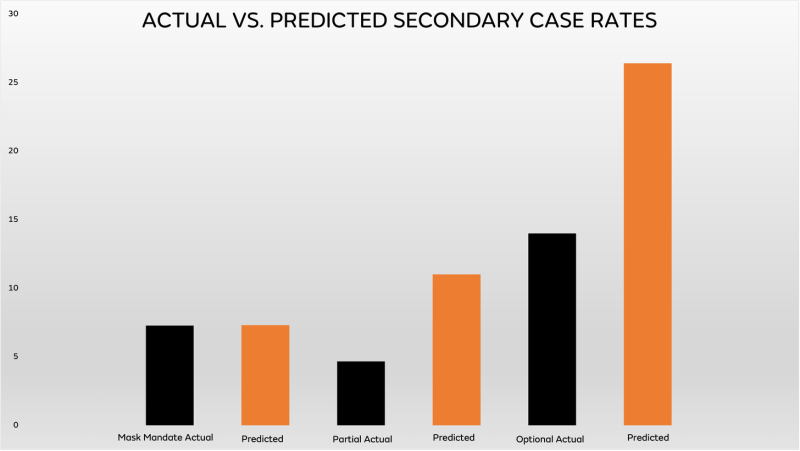

All primary rates are in black bars, and secondary (school) transmission is in orange.

What should immediately grab your attention is the remarkable disparity between community cases among mask mandate schools and any of the other tracked rates.

It’s an enormous difference, which is why it’s important to point out that even if you assume their assertions are accurate, this could help explain some of the secondary outcomes.

And as Tracy concluded, we shouldn’t assume their assertions are accurate, because wildly different contact-tracing policies could be to blame.

It’s also vital to note that the lowest rate of secondary transmission was not in the districts with mask mandates, but was found in those that were partially masked, which is defined as districts that changed their mask policies during the study period. You’d think this monumental policy change and the inevitable “confusion” teachers’ unions are so concerned about would lead to the worst outcomes, but they had the best results.

Also of note is how little transmission actually occurs in schools, assuming that their contact tracing is accurate and performed correctly. No matter which cohort you examine, school transmission is minimal. Schools should never have closed, and those that did should have been reopened immediately. Keeping them closed was a crime that will undeniably cost humanity for years.

Finally, the study press release dramatically states that mask mandates were associated with 72% lower case rates during the Delta variant era. However, even a cursory glance at the secondary outcomes doesn’t show a 72% lower rate for masked schools.

The reason for this is they didn’t use the raw case rates, but “predicted” rates.

Predicted Case Rates

Yup. It’s a model.

To estimate the impact of masking on secondary transmission, we used a quasi-Poisson regression model.

They estimated it.

And looking at the difference between the actual case rates and the “predicted” modeling estimates shows how they reached 72%:

Boy, that sure looks different, doesn’t it? When you place the actual rates in black and the adjusted rates in orange, you can see how they arrived at their headline.

The mask mandate school rate doesn’t change, but the partial and optional rates certainly look different, don’t they?

Suddenly, the best performing schools weren’t from partial masking districts and the rate in mask optional schools nearly doubled, from 13.99 to 26.4.

Now you see why they used a model.

The confidence intervals of their model are equally laughable:

- Universal: 6.3-8.4

- Partial: 6.5-18.4

- Optional: 10.9-64.4

10.9-64.4! How did they release this with a straight face? It’s completely absurd. It’s beyond absurd.
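A quick sanity check ties the headline to the modeled numbers. The sketch below uses only figures quoted in this article (the 26.4 predicted rate, the two intervals, and the 72% headline) and makes no assumption about the units. The variable names are illustrative.

```python
# Figures quoted in this article; names are illustrative.
predicted_optional = 26.4          # modeled rate, mask-optional districts
universal_interval = (6.3, 8.4)    # modeled interval, universal masking
optional_interval = (10.9, 64.4)   # modeled interval, mask-optional
headline_reduction = 0.72          # "72% lower" from the press release

# Universal rate implied by the headline, given the optional estimate.
implied_universal = predicted_optional * (1 - headline_reduction)
print(f"implied universal rate: {implied_universal:.2f}")

# Width of each interval, expressed as upper bound divided by lower bound.
for name, (low, high) in [("universal", universal_interval),
                          ("optional", optional_interval)]:
    print(f"{name}: upper/lower = {high / low:.1f}")
```

The implied universal rate lands inside the quoted 6.3-8.4 interval, so the 72% figure is consistent with the modeled rates rather than the raw ones. The optional interval's upper bound is nearly six times its lower bound.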

And again, it falls prey to sample size issues. Here is the total number of secondary infections per cohort:

- Universal: 2,776

- Partial: 231

- Optional: 78

That’s correct: this entire study comes down to 78 cases in optional masking districts, out of 1,269,968 individuals tracked in the study.

With numbers like that, it’s easy to understand why their confidence intervals are ridiculously large.
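As a rough illustration of why small counts produce wide intervals, the sketch below applies a simple Poisson normal approximation to the three case counts listed above. This is not the study's quasi-Poisson regression, which allows for overdispersion and would typically give wider intervals still. The function name `relative_half_width` is made up for this example.

```python
import math

# Secondary infection counts per cohort, as quoted in this article.
secondary_cases = {"universal": 2776, "partial": 231, "optional": 78}

def relative_half_width(count, z=1.96):
    """Approximate 95% half-width of a Poisson count, as a fraction of the count."""
    return z * math.sqrt(count) / count

for cohort, count in secondary_cases.items():
    print(f"{cohort}: {count} cases, about ±{relative_half_width(count):.0%}")
```

Even under this generous simplification, the uncertainty on 78 cases is several times larger, in relative terms, than on 2,776.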

Oh, and those cases aren’t separated out by student or staff, so we have no idea how the transmission patterns worked, such as whether staff mostly transmitted to other staff.

This study is patently absurd and egregiously useless.

In theory, the goal was laudable: trying to determine community and school transmission and attribute it to different masking policies.

In practice, it’s a complete farce.

The sample sizes are woefully unbalanced. A potentially fatal flaw in contact tracing was ignored, due in large part to the CDC’s absurdly incompetent guidance. Numerous other confounders are mentioned in the study documents, which no one will read. The actual rates highlight how little transmission (potentially) occurs in schools, and showed that the best performing districts were partially masked, not fully. Assuming the community rates are accurate, the researchers also ignored that natural immunity could play a significant role in secondary transmission.

The entire analysis comes down to a grand total of 78 cases in mask-optional schools, between students and staff. And finally, and perhaps most importantly, it uses yet another model that generates unbelievably useless confidence intervals.

It is impossible to take away meaningful results from this. It cannot be used to inform policy or to justify continuing to harm kids. Even The Washington Post is admitting that schools with fewer containment measures produced more successful students.

But predictably it is already being weaponized by the teachers’ unions to promote endless masking.

It is imperative that the public educate themselves on how much misinformation is being disseminated by activist researchers, designed to appeal to the goals and ideologies of partisan political actors.

Many children could be permanently affected by an atrocious “study” meant to promote a meaningless, destructive policy.

In a just, sane world, this study would be retracted and its champions would be forced to admit it was nonsense. But as we all know, sanity died almost exactly two years ago. And we’ll be paying for it indefinitely.